Integrating Movable IoT Devices into AR Applications

This project is already assigned.

Motivation and Goals

Intensive care units and operating rooms are multitasking environments. Physicians and nurses have to monitor several machines and values as well as patients. Previous work [6] has shown that using head-worn Augmented Reality (AR) glasses can increase situational awareness. AR glasses, e.g. the Microsoft HoloLens, can visually enrich the environment with digital data. This might help physicians and nurses to fulfill their tasks efficiently and might raise the overall quality of treatment. As these glasses do not require touch interaction, they comply with hygiene restrictions. To collect and provide data from the environment, sensors and microcontrollers need to be placed and interconnected. As the Internet of Things (IoT) is on the rise [3], more effort is being put into making networks of low-level computers more accessible to higher-level applications. Platforms and frameworks are being developed and revisited, but they are often found to be difficult to understand [4]. Moreover, frameworks support different devices and are each optimized for specific purposes. This limits the reusability and setup speed of prototypes and leaves the potential for distant interaction unused.

To support connections with a prototype IoT network, a Gateway application will be provided. It shall feature simple value mappings, such as getting values from and setting values on microcontrollers, as well as streaming values, that is, continuously receiving updated data. Simple callback rules, such as triggering a notification when one particular sensor or any sensor of a group exceeds a given limit, are higher- or lower-level implementations. They can therefore be implemented either as microcontroller functionality or as application logic.
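The two kinds of callback rule mentioned above (one watched sensor versus any sensor of a group) fit in a few lines of Python, regardless of whether they end up in the application layer or are ported to a microcontroller. This is a minimal sketch; the sensor names, the limits and the helpers `exceeded` and `check_rule` are hypothetical and not part of any planned API.

```python
from typing import Callable, Dict, Iterable, List, Optional

Readings = Dict[str, float]


def exceeded(readings: Readings, limits: Dict[str, float],
             watch: Optional[Iterable[str]] = None) -> List[str]:
    """Return the sorted names of watched sensors that are above their limit.

    `watch` selects one or several sensors; if omitted, every sensor that
    has a limit is watched (the "any sensor" rule).
    """
    names = limits.keys() if watch is None else watch
    return sorted(n for n in names
                  if n in readings and n in limits and readings[n] > limits[n])


def check_rule(readings: Readings, limits: Dict[str, float],
               notify: Callable[[List[str]], None],
               watch: Optional[Iterable[str]] = None) -> bool:
    """Call `notify` once with all offending sensors; return True if it fired."""
    offending = exceeded(readings, limits, watch)
    if offending:
        notify(offending)
    return bool(offending)


# Usage with hypothetical sensor names and limits.
readings = {"heart_rate": 128.0, "valve_angle": 45.0}
limits = {"heart_rate": 120.0, "valve_angle": 90.0}
alerts: List[List[str]] = []
check_rule(readings, limits, alerts.append)                         # any sensor
check_rule(readings, limits, alerts.append, watch=["valve_angle"])  # one sensor
print(alerts)  # [['heart_rate']]
```

Keeping the rule a pure function of the latest readings means the Gateway itself stays free of trigger logic, as the concept requires.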

To boost the spatial awareness of staff using IoT information in AR applications in medical contexts, a position tracking system will be integrated into the IoT network. It should use the previously designed Gateway to publish its measurements to other applications. This allows spatial properties to be joined with device data for further gesture-based or directional interaction.

Related Work

Research approaches IoT by defining the important actors and terminology in the field. In [4], Internet of Things middleware is described as “the software which makes the bridge between data and device of the IoT platform”. The authors put great effort into grouping actors into middleware components, which differ slightly between the reviewed platforms.

Noting that the data transmitted between devices can reach massive volumes, they suggest that machine-to-machine (M2M) connectivity must keep pace. Although a large base of IoT frameworks is available, “they require proper presentation in terms of framework and platforms” [4]. Since developers need to know a framework and its capabilities, it is advisable to learn from the underlying concepts and adapt them for different purposes.

Another article about IoT architectures [3] derives an abstract architecture from the reviewed frameworks and provides a useful simplification of the architecture introduced in [8]. The latter describes a three-layer architecture: the first layer is responsible for perception and hence consists of sensors, actuators, or both encapsulated into devices; the second layer provides network functionality, e.g. via Wi-Fi; and the third layer implements the application logic. Article [3], however, splits up the second layer and moves its functionality into a Gateway, which is responsible for communicating with devices, and an IoT Integration Middleware, which implements part of the logic and manages data. For simplicity's sake, one can argue for omitting the logic in the middleware, allowing logic to be implemented either in the IoT device itself or in the application. This reduces the overall maintenance effort for the middleware application.

Research also shows a convergence of AR and IoT in recent years [5], as AR appears to be building up consumer potential. One result is a precise study of spatial selection techniques using an infrared beam mounted on AR glasses [7]. Microcontrollers detect the infrared beam and report whether they are being looked at. Even though this technique offers a simple way to detect the direction the user is looking in within the real world, it might not be suitable for this project, as it lacks depth information. Also, it only works while the user is looking towards a receiving microcontroller. Moreover, the controller does not report its position but only the intensity of the received light beam. As this project requires positions in view space, the proposed approach does not apply.

AR frameworks also rely on a direct line of sight and on the computer's ability to detect an image within a given video feed. However, microcontrollers come in various shapes, and their placement might be hidden, so the visual recognition approach cannot always be used. An ultrasonic indoor positioning system provided by Marvelmind uses its own beacons to measure the movements of lightweight receiving devices. It uses trilateration (positioning from measured distances) and therefore needs a direct line of sight to at least three beacons at the same time. Its advantages are its accuracy (± 2 cm) and its ability to provide position information even when there is no direct line of sight to the user. With this spatial information, selection via raycasts (e.g. Unity's RaycastHit) can be implemented. However, ultrasound might interfere with diagnostic systems. Also, the setup process is not designed for constant movement of the beacons, which makes it a challenging procedure.
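Why distances to several beacons are needed can be seen in a generic 2D trilateration sketch. This is not Marvelmind's internal algorithm (which is not described here); it assumes ideal, noise-free distances, a fixed speed of sound of 343 m/s, and a planar setup, whereas 3D tracking needs additional beacons. The helper names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]
SPEED_OF_SOUND = 343.0  # m/s in dry air at about 20 °C (assumption)


def distance_from_tof(seconds: float, speed: float = SPEED_OF_SOUND) -> float:
    """Convert an ultrasonic time of flight into a distance in meters."""
    return seconds * speed


def trilaterate_2d(beacons: Sequence[Point], dists: Sequence[float]) -> Point:
    """Solve for (x, y) from distances to three non-collinear beacons.

    Subtracting the first circle equation (x-x1)^2 + (y-y1)^2 = r1^2 from
    the other two gives two linear equations, solved here by Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = beacons
    r1, r2, r3 = dists
    a1, b1 = 2 * (x2 - x1), 2 * (y2 - y1)
    c1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    a2, b2 = 2 * (x3 - x1), 2 * (y3 - y1)
    c2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a1 * b2 - a2 * b1
    if math.isclose(det, 0.0, abs_tol=1e-12):
        raise ValueError("beacons are collinear; position is ambiguous")
    return ((c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det)


# Usage: beacons at the corners of a 4 m x 3 m area, receiver at (1, 1).
beacons = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
dists = [math.dist(b, (1.0, 1.0)) for b in beacons]
x, y = trilaterate_2d(beacons, dists)
print(round(x, 6), round(y, 6))  # 1.0 1.0
```

With only two distances, two mirror-image positions remain possible, which is why a third beacon in view is required even in the planar case.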

Concepts

An Arduino board will be used to drive a digital continuous rotation feedback servo. The servo should function as an input and an output device at the same time by being attached to a mock rotary air valve. This setup can be used to test remote interactions. The system described below shall support multiple microcontrollers from various brands at the same time. Connectivity between the controllers and a server-like application (Gateway) will be established via Ethernet or Wi-Fi. By using plain TCP/IP connections, any controller with wired or wireless network capabilities should be able to connect to the Gateway and, by implementing a simple protocol, send information to and receive information from the application layer.
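To illustrate how little a TCP-capable controller needs for such a simple protocol, the sketch below frames MQTT packets (the protocol chosen later in this section) by hand. It assumes MQTT 3.1.1, a clean session, no authentication and QoS 0; in practice an existing client library would be used, and a client must wait for the broker's CONNACK before publishing. The helper names are hypothetical.

```python
def encode_remaining_length(n: int) -> bytes:
    """MQTT variable-length integer: 7 bits per byte, MSB = continuation."""
    if not 0 <= n <= 268_435_455:
        raise ValueError("remaining length out of range")
    out = bytearray()
    while True:
        byte, n = n % 128, n // 128
        if n > 0:
            byte |= 0x80  # more length bytes follow
        out.append(byte)
        if n == 0:
            return bytes(out)


def mqtt_string(s: str) -> bytes:
    """UTF-8 string prefixed with its 2-byte big-endian length."""
    data = s.encode("utf-8")
    return len(data).to_bytes(2, "big") + data


def build_connect(client_id: str, keep_alive: int = 60) -> bytes:
    """CONNECT packet: protocol name "MQTT", level 4 (3.1.1), clean-session flag."""
    variable = mqtt_string("MQTT") + bytes([0x04, 0x02]) + keep_alive.to_bytes(2, "big")
    body = variable + mqtt_string(client_id)
    return bytes([0x10]) + encode_remaining_length(len(body)) + body


def build_publish(topic: str, payload: bytes, retain: bool = False) -> bytes:
    """QoS 0 PUBLISH packet; no packet identifier is sent at QoS 0."""
    body = mqtt_string(topic) + payload
    header = 0x30 | (0x01 if retain else 0x00)
    return bytes([header]) + encode_remaining_length(len(body)) + body


print(encode_remaining_length(321).hex())  # c102
print(build_publish("a/b", b"1").hex())    # 30060003612f6231
```

With Python's standard `socket` module, a client could send `build_connect(...)` to the broker on port 1883, read the 4-byte CONNACK, and then send PUBLISH packets; a microcontroller would do the same with its own TCP stack.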

The Gateway is a server-like application that sits between a top-level application and the low-level microcontrollers. It ensures communication from higher- to lower-level machines and vice versa. The Gateway is not responsible for specific action mappings (e.g. triggers). Higher functionality can be implemented either in the IoT device itself or in the application layer, allowing greater flexibility for function mapping.

The Gateway shall distinguish between different devices and their functionality and block faulty requests and commands, thereby improving the stability of the system. It should also allow different sensor data to be grouped together when needed. The described functionality is supported by the MQTT protocol. MQTT is a lightweight publish/subscribe protocol for network communication in which messages are published under UTF-8 string topics; the payloads are byte sequences and are often plain text. An MQTT Broker application provides a single access point for data collection and command publishing. MQTT clients such as microcontrollers and main applications can connect to the Broker, publish payloads to various topics, and subscribe to them. Connecting a device therefore poses no barrier during the prototyping phase. As almost every network device supports TCP/IP connections and can handle simple character strings, this protocol is widely applicable, and devices without network access can be connected as well. For example, a simple, readable Python script that translates between MQTT and other connections (e.g. serial communication) can extend support to even more devices.
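Grouping sensor data and blocking faulty requests both follow from MQTT's topic rules: `+` matches exactly one topic level, `#` matches a level and everything below it, and wildcards are not allowed in published topic names. A broker such as Mosquitto already enforces these rules; the sketch below only re-implements them to show the semantics, using a hypothetical topic layout `ward/<bed>/<device>/<value>` and hypothetical helper names.

```python
def validate_topic_name(topic: str) -> None:
    """Reject topic names that a broker would refuse for PUBLISH."""
    if not topic:
        raise ValueError("topic must not be empty")
    if "+" in topic or "#" in topic:
        raise ValueError("wildcards are not allowed when publishing")
    if "\x00" in topic:
        raise ValueError("null character is not allowed")


def validate_filter(topic_filter: str) -> None:
    """'+' and '#' must fill a whole level, and '#' must be the last level."""
    if not topic_filter:
        raise ValueError("filter must not be empty")
    levels = topic_filter.split("/")
    for i, level in enumerate(levels):
        if ("+" in level or "#" in level) and level not in ("+", "#"):
            raise ValueError(f"wildcard must occupy a whole level: {level!r}")
        if level == "#" and i != len(levels) - 1:
            raise ValueError("'#' must be the last level")


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if a subscription filter matches a concrete topic name."""
    validate_filter(topic_filter)
    f_levels, t_levels = topic_filter.split("/"), topic.split("/")
    # Filters starting with a wildcard do not match $-topics such as $SYS/...
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            return True  # this level and everything below it (or nothing)
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


# Usage: one subscription groups the valve data of every bed.
subscriptions = ["ward/+/valve/#"]
for t in ["ward/bed1/valve/angle", "ward/bed2/valve/speed", "ward/bed1/monitor/hr"]:
    print(t, any(topic_matches(f, t) for f in subscriptions))  # True, True, False
```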

To support tracking in 3D space, a Marvelmind indoor positioning system shall be integrated. A translating script might also be a way to connect the Marvelmind system to the MQTT Broker. Further specifications can be made once the ultrasonic system has been tested. Setting up the Marvelmind system requires precise measurements of the distances between the beacons. These distances define the tracking space and are therefore important parameters in the driver launch process. The system then reports positions in real-world units (e.g. meters). To integrate the Marvelmind coordinate system into an application coordinate system (e.g. HoloLens or Unity), spatial alignment and transformation are necessary.
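Why the distances between beacons matter can be shown with a worked example: from three measured pairwise distances, the beacons can be placed in a local 2D frame via the law of cosines. This is a generic illustration, not the procedure used by Marvelmind's own software; the convention (A at the origin, B on the +x axis, C on the +y side) and the function name are assumptions.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def beacon_layout(d_ab: float, d_ac: float, d_bc: float) -> Tuple[Point, Point, Point]:
    """Place beacons A, B, C in a local 2D frame from measured distances.

    A defines the origin, B lies on the +x axis, and C is placed on the +y
    side (the mirror-image solution is discarded by convention).
    """
    if d_ab <= 0:
        raise ValueError("A and B must not coincide")
    x_c = (d_ac**2 + d_ab**2 - d_bc**2) / (2 * d_ab)  # law of cosines
    y_sq = d_ac**2 - x_c**2
    if y_sq <= 0:
        raise ValueError("distances are collinear or violate the triangle inequality")
    return (0.0, 0.0), (d_ab, 0.0), (x_c, math.sqrt(y_sq))


# Usage: a 4 m / 3 m / 5 m right triangle of beacons.
a, b, c = beacon_layout(4.0, 3.0, 5.0)
print(a, b, c)  # (0.0, 0.0) (4.0, 0.0) (0.0, 3.0)
```

In this example, an error of 1 cm in the B–C distance (5.01 m instead of 5.00 m) moves C by about 1.25 cm along x, and every position computed later inherits that error.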

Methodology

The project will start by assembling the microcontroller for testing purposes. Other devices providing various basic functions might be used to test the setup beforehand. As these controllers might not feature Wi-Fi or Ethernet access, a Bridge application that translates between USB serial and network communication can be provided.
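Such a Bridge mainly has to translate lines between the two sides. The sketch below assumes a hypothetical line format `<key> <value>` sent by the controller over USB serial and a hypothetical topic layout `devices/<id>/...` (with commands arriving on `devices/<id>/set/<key>`). The actual serial and MQTT I/O, e.g. pyserial's `Serial.readline()` and an MQTT client's publish call, is left out so that the translation logic can be tested on its own.

```python
from typing import Optional, Tuple


def serial_to_mqtt(raw: bytes, device_id: str) -> Optional[Tuple[str, str]]:
    """Translate one serial line 'key value' into an MQTT (topic, payload) pair.

    Returns None for empty or malformed lines so the bridge can drop them.
    """
    line = raw.decode("utf-8", errors="replace").strip()
    key, sep, value = line.partition(" ")
    value = value.strip()
    if not key or not sep or not value or "+" in key or "#" in key:
        return None
    return f"devices/{device_id}/{key}", value


def mqtt_to_serial(topic: str, payload: str, device_id: str) -> Optional[bytes]:
    """Translate a command on devices/<id>/set/<key> into one serial line."""
    prefix = f"devices/{device_id}/set/"
    if not topic.startswith(prefix) or len(topic) == len(prefix):
        return None
    key = topic[len(prefix):]
    return f"{key} {payload}\n".encode("utf-8")


print(serial_to_mqtt(b"valve/angle 42.5\r\n", "arduino1"))
# ('devices/arduino1/valve/angle', '42.5')
print(mqtt_to_serial("devices/arduino1/set/valve/angle", "90", "arduino1"))
# b'valve/angle 90\n'
```

Dropping malformed lines in the Bridge keeps faulty data away from the Broker, in line with the Gateway's goal of blocking faulty requests.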

An open-source MQTT Broker application will be used to provide the Gateway functionality. The Eclipse Mosquitto software is well documented and easy to use, and it runs on all major computer platforms, including the Raspberry Pi. Because MQTT is an ISO-standardized protocol (ISO/IEC 20922), many apps providing MQTT Broker and client functionality exist. All data provided via MQTT shall be accessible within a Unity Game Engine application running on Microsoft HoloLens hardware. To reach this goal, an MQTT package for Unity from GitHub can be used. Both packages found [1, 2] are free to use, modify, and publish, but come without warranty (MIT License).

The Marvelmind ultrasonic position tracking system will connect to a computer via USB. Using a lightweight Python script, a translation between the Marvelmind API and MQTT communication can be established. The Marvelmind coordinate system needs to be merged with, or recalculated to fit, the HoloLens coordinate system, which is created by tracking salient points in the surroundings. As a bridge application will be available, it might be convenient to implement the alignment of the coordinate systems (calibration) in this script. With the calibration functionality in the script, users could obtain the raw data as provided and, once a short calibration sequence has completed successfully, also “real world data” in HoloLens coordinates. To achieve this, corresponding points in the HoloLens coordinate system need to be transmitted to the bridge application, where they are paired with the raw data and used to compute a transformation matrix (for metric 3D point correspondences, a rigid or affine transform rather than a planar homography). This matrix is then used to transform the points into the HoloLens coordinate system.
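For the calibration step, a rigid transform (rotation plus translation, fitted by least squares as in the Kabsch method) is a common alternative to a homography when both systems measure in meters; the sketch below swaps that technique in and restricts it to the floor plane. It assumes that height is handled separately (e.g. as a constant offset), that Marvelmind's x–y plane is paired with the HoloLens/Unity x–z plane (Unity's y axis points up), and that neither axis pair is mirrored; if one is, that axis would have to be flipped before fitting. All function names and calibration values are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def fit_rigid_2d(src: Sequence[Point], dst: Sequence[Point]) -> Tuple[float, Point]:
    """Least-squares rotation angle and translation mapping src onto dst."""
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two corresponding point pairs")
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy  # center both sets
        num += px * qy - py * qx  # sum of 2D cross products
        den += px * qx + py * qy  # sum of dot products
    if math.isclose(num, 0.0, abs_tol=1e-12) and math.isclose(den, 0.0, abs_tol=1e-12):
        raise ValueError("degenerate calibration points (all identical)")
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated source centroid onto the target centroid.
    return theta, (dx - (c * sx - s * sy), dy - (s * sx + c * sy))


def apply_rigid_2d(theta: float, t: Point, p: Point) -> Point:
    """Rotate p by theta, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])


def rms_error(theta: float, t: Point, src: Sequence[Point], dst: Sequence[Point]) -> float:
    """Root-mean-square residual, usable as a pass/fail check for calibration."""
    sq = [math.dist(apply_rigid_2d(theta, t, p), q) ** 2 for p, q in zip(src, dst)]
    return math.sqrt(sum(sq) / len(sq))


# Usage with hypothetical calibration points (Marvelmind x-y vs. HoloLens x-z).
marvelmind = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
hololens_xz = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]
theta, t = fit_rigid_2d(marvelmind, hololens_xz)
print(round(math.degrees(theta), 3), [round(v, 3) for v in t])  # 90.0 [2.0, 3.0]
```

A rigid fit keeps the metric scale of both systems, so a large `rms_error` after calibration points to bad correspondences rather than being absorbed by a scaling or perspective term.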

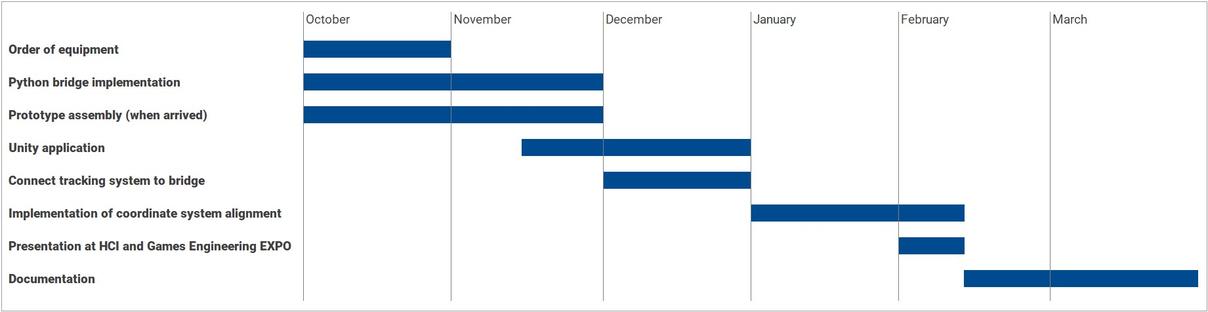

Work Schedule


References

[1] M2MQTT for Unity, gpvigano package on GitHub. https://github.com/gpvigano/M2MqttUnity. Accessed: 22.11.2020.

[2] Unity 3D MQTT, vovacooper package on GitHub. https://github.com/vovacooper/Unity3d_MQTT. Accessed: 22.11.2020.

[3] J. Guth, U. Breitenbücher, M. Falkenthal, F. Leymann, and L. Reinfurt. Comparison of IoT platform architectures: A field study based on a reference architecture. In 2016 Cloudification of the Internet of Things (CIoT), pages 1–6, 2016.

[4] Sumanta Kuila, Namrata Dhanda, Subhankar Joardar, and Sarmistha Neogy. Analytical survey on standards of Internet of Things framework and platforms. In Emerging Technologies in Data Mining and Information Security, pages 33–44. Springer, 2019.

[5] Nahal Norouzi, Gerd Bruder, Brandon Belna, Stefanie Mutter, Damla Turgut, and Greg Welch. A systematic review of the convergence of augmented reality, intelligent virtual agents, and the Internet of Things. In Artificial Intelligence in IoT, pages 1–24. Springer, 2019.

[6] Paul Schlosser, Tobias Grundgeiger, and Oliver Happel. Multiple patient monitoring in the operating room using a head-mounted display. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, pages 1–6, 2018.

[7] Ben Zhang, Yu-Hsiang Chen, Claire Tuna, Achal Dave, Yang Li, Edward Lee, and Björn Hartmann. HOBS: Head orientation-based selection in physical spaces. In Proceedings of the 2nd ACM Symposium on Spatial User Interaction, pages 17–25, 2014.

[8] Lirong Zheng, Hui Zhang, Weili Han, Xiaolin Zhou, Jing He, Zhi Zhang, Yun Gu, Junyu Wang, et al. Technologies, applications, and governance in the Internet of Things. In Internet of Things: Global Technological and Societal Trends. From Smart Environments and Spaces to Green ICT, 2011.

Contact Persons at the University of Würzburg

Dr. Florian Niebling (Primary Contact Person), Mensch-Computer-Interaktion, Universität Würzburg

florian.niebling@uni-wuerzburg.de